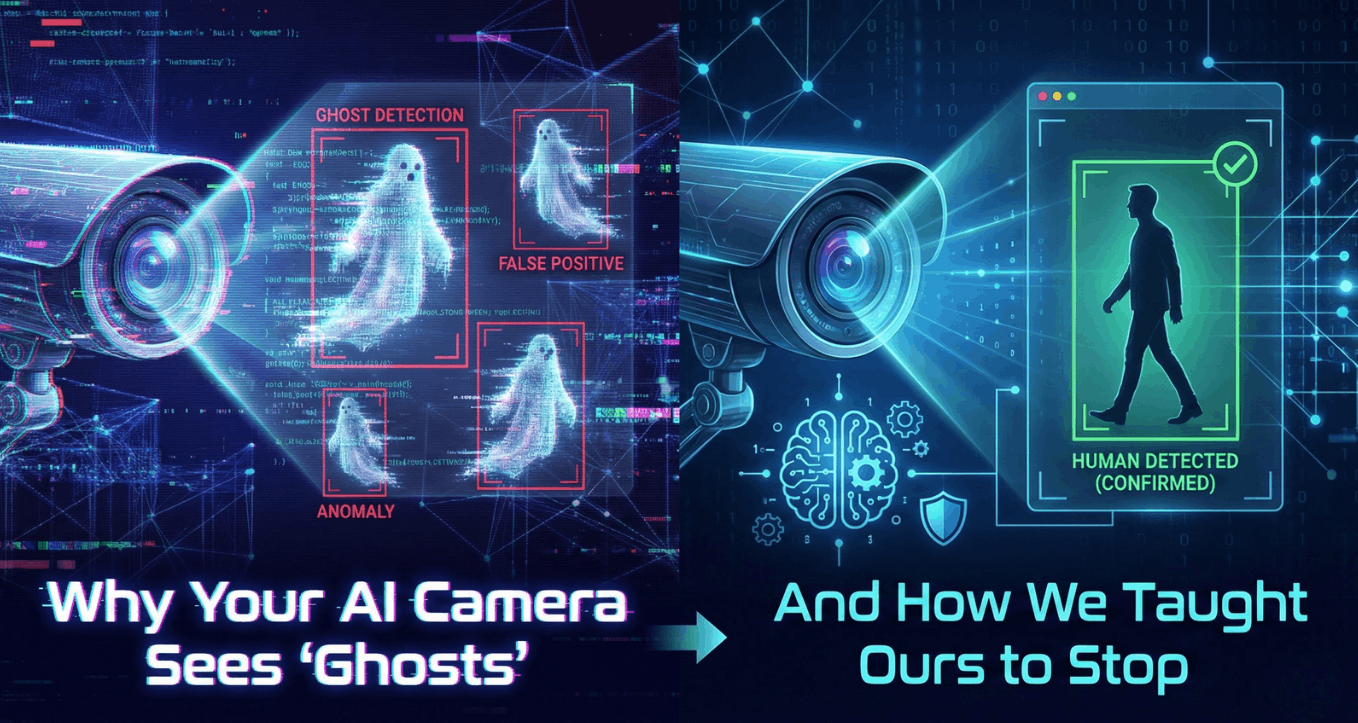

Why Your AI Camera Sees "Ghosts" (And How We Taught Ours to Stop)

Introduction – The "Boy Who Cried Wolf" Problem

If you've ever managed site security or plant operations, you've probably seen this pattern:

Day 1: The AI alerts feel impressive.

Day 7: The team starts muting notifications.

Day 30: A real incident happens… and the alert gets ignored.

That's the modern version of The Boy Who Cried Wolf—not because people don't care, but because too many false alarms train humans to stop trusting the system.

In real facilities, false triggers aren't rare edge cases. They're everyday reality:

- Leaves and branches moving in the wind

- Stray dogs and cats crossing the perimeter

- Shadows shifting in the morning or evening

- Reflections from glass doors, polished floors, or wet ground

- Rain streaks on the lens

- Steam, dust, smoke, or insects near the camera

When these events cause "intrusion detected" alerts repeatedly, the result isn't just annoyance. It becomes an operational risk: your best security tool becomes background noise.

So why does an AI camera "see ghosts"? And more importantly, how do you engineer it to stop?

The Technical "Why"

Why traditional motion detection fails

Many camera systems still rely on motion detection based on pixel-level change:

- Compare the current frame vs a previous frame

- If a large enough area changes, raise an alert

- Often backed by background subtraction (model the "static" scene, then detect differences)

That works in a perfectly stable environment. But most industrial sites are not stable.
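To make the failure mode concrete, here is a minimal pixel-change detector with a running-average background model, in plain Python. It assumes 8-bit grayscale frames stored as nested lists. The function names and the `pixel_delta`, `area_ratio`, and `alpha` defaults are illustrative choices, not settings from any particular camera product.

```python
from typing import List

Frame = List[List[float]]  # grayscale intensities, 0-255


def changed_fraction(reference: Frame, current: Frame, pixel_delta: float = 25.0) -> float:
    """Fraction of pixels whose intensity differs from the reference by more than pixel_delta."""
    total = changed = 0
    for ref_row, cur_row in zip(reference, current):
        for ref_px, cur_px in zip(ref_row, cur_row):
            total += 1
            if abs(cur_px - ref_px) > pixel_delta:
                changed += 1
    return changed / total if total else 0.0


def motion_alert(reference: Frame, current: Frame,
                 pixel_delta: float = 25.0, area_ratio: float = 0.02) -> bool:
    """Alert when a large enough share of the frame changed; it never asks *what* changed."""
    return changed_fraction(reference, current, pixel_delta) > area_ratio


def update_background(background: Frame, current: Frame, alpha: float = 0.05) -> Frame:
    """Running-average background model: blend a small share of each new frame into the 'static' scene."""
    return [[(1 - alpha) * b + alpha * c for b, c in zip(bg_row, cur_row)]
            for bg_row, cur_row in zip(background, current)]


# An empty, dark scene versus the same empty scene swept by headlights.
night = [[20.0] * 8 for _ in range(8)]
headlights = [[120.0] * 8 for _ in range(8)]
print(motion_alert(night, headlights))  # True: a lighting change counts as "motion"
```

Because the rule only counts changed pixels, a headlight sweep over an empty scene trips it exactly as a person would.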

Dynamic environments are motion factories

Factories, warehouses, construction yards, and plant perimeters contain constant non-human motion:

- Wind moves trees, flags, and hanging cables

- Steam plumes grow and shrink unpredictably

- Rain introduces fast, noisy patterns across the whole frame

- Low light makes sensors noisy and amplifies flicker

- Headlights cause sudden brightness changes

- Camera compression artifacts create shimmer

Traditional motion detection sees all motion as suspicious. It can't ask: "Is this motion meaningful?"

It only sees: "Something changed."

And "something changed" happens hundreds of times a night.

Section 1: The Noise vs. Signal Challenge

Here's the hardest reality in vision AI:

Most of what a camera sees is noise. Real security events are the signal.

The difficult part isn't detecting movement. The difficult part is teaching the system:

"This is NOT an intruder."

Why "negative samples" matter (and are hard)

In machine learning, we talk about positive and negative examples:

- Positive: actual intrusions, unauthorized human presence, climbing fences, loitering

- Negative: everything else that looks similar but should not trigger an alarm

The issue is: the "everything else" category is huge and unpredictable.

A stray dog at 20 meters can look like a crawling human at low resolution.

A rain-streaked lens can look like fast movement.

A shadow crossing a wall can look like a person entering.

So the model doesn't just need to learn "human = alert."

It needs to learn "human in the right context = alert."
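One way to express "human in the right context" is to gate a person detection on where and when it happens. This is a minimal sketch under stated assumptions: the `Detection` type, the label strings, the restricted-zone polygon, and the 22:00 to 06:00 after-hours window are all hypothetical, and an upstream detector is assumed to supply the label and box.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Detection:
    label: str                                  # e.g. "person", "dog" (hypothetical label set)
    box: Tuple[float, float, float, float]      # (x1, y1, x2, y2) in pixels


def point_in_polygon(pt: Point, polygon: List[Point]) -> bool:
    """Ray casting: a point is inside if a horizontal ray from it crosses an odd number of edges."""
    x, y = pt
    inside = False
    for i in range(len(polygon)):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % len(polygon)]
        if (y1 > y) != (y2 > y):                # edge straddles the ray, so y1 != y2
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def in_time_window(hour: int, start: int, end: int) -> bool:
    """True if hour is in [start, end), allowing windows that wrap past midnight."""
    if start <= end:
        return start <= hour < end
    return hour >= start or hour < end


def should_alert(det: Detection, restricted_zone: List[Point], hour: int,
                 after_hours: Tuple[int, int] = (22, 6)) -> bool:
    """Person + inside the restricted zone + outside shift hours => alert."""
    if det.label != "person":
        return False
    x1, _, x2, y2 = det.box
    feet = ((x1 + x2) / 2, y2)                  # bottom-center of the box
    return point_in_polygon(feet, restricted_zone) and in_time_window(hour, *after_hours)
```

Using the bottom-center of the box as the "feet" point is a common heuristic for ground-plane zones. With this rule, a dog in the same spot, or a person there at 14:00, does not raise an alert.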

Background subtraction vs deep learning classification

Think of background subtraction like a very sensitive microphone.

It picks up:

- speech

- wind

- fan noise

- footsteps

- chair squeaks

It detects everything; it doesn't filter noise intelligently.

Deep learning, on the other hand, works more like a trained listener. It can identify:

"That's a dog."

"That's a person."

"That's steam."

"That's a moving reflection."

Not perfectly, but far better than pixel-change logic.

Still, classification alone isn't enough in real deployments, because the real world doesn't deliver clean images.

Which brings us to engineering the system, not just training a model.

Section 2: The Mikshi AI Approach (Solution)

At Mikshi AI, we didn't treat false positives as "minor bugs."

We treated them as the core product problem.

Step 1: Confidence thresholds and sensitivity tuning

Deep learning detection models typically output a confidence score with every detection.

A common mistake is thinking:

"Higher sensitivity = better security."

In practice, it's like setting a smoke alarm so sensitive that boiling water triggers an emergency evacuation.

Yes, you'll "catch more," but you'll also drown the team in alerts.

More sensitivity isn't always better because:

- it increases false positives

- it reduces trust

- ignored alerts are effectively missed alerts

So instead of running at "maximum sensitivity," we tune confidence thresholds based on:

- camera angle and distance

- lighting conditions

- environment motion level

- the cost of false alarms vs missed events

This is not a one-size-fits-all slider. It's an engineering decision.
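As one illustration of treating the threshold as an engineering decision, the sketch below picks the confidence threshold that minimizes expected cost on a labeled validation set from a single camera, so each camera gets its own value. The relative costs `cost_false_alarm` and `cost_missed_event` are assumptions a site team would set. This is a generic cost-weighted threshold search, not necessarily how Mikshi AI tunes its thresholds.

```python
from typing import Sequence, Tuple


def expected_cost(scores: Sequence[float], is_intrusion: Sequence[bool], threshold: float,
                  cost_false_alarm: float, cost_missed_event: float) -> float:
    """Total cost of alerting on every score >= threshold."""
    cost = 0.0
    for score, real in zip(scores, is_intrusion):
        alert = score >= threshold
        if alert and not real:
            cost += cost_false_alarm        # nuisance alert
        elif real and not alert:
            cost += cost_missed_event       # missed intrusion
    return cost


def pick_threshold(scores: Sequence[float], is_intrusion: Sequence[bool],
                   cost_false_alarm: float = 1.0,
                   cost_missed_event: float = 20.0) -> Tuple[float, float]:
    """Try each observed score as a threshold; return (threshold, cost) with the lowest cost."""
    candidates = sorted(set(scores)) + [float("inf")]   # inf means "never alert"
    best = min(candidates, key=lambda t: expected_cost(
        scores, is_intrusion, t, cost_false_alarm, cost_missed_event))
    return best, expected_cost(scores, is_intrusion, best, cost_false_alarm, cost_missed_event)


# Illustrative validation data for one camera (made-up scores and labels).
scores = [0.30, 0.45, 0.55, 0.62, 0.80, 0.91]
labels = [False, False, False, True, False, True]
print(pick_threshold(scores, labels))                          # (0.62, 1.0)
print(pick_threshold(scores, labels, cost_missed_event=0.5))   # (0.91, 0.5)
```

With misses weighted 20 times heavier than nuisance alerts, the search settles on 0.62; lower the miss cost and it moves up to 0.91. Same scores, different site priorities, different threshold.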

Step 2: Multi-stage filtering (motion → object → verification)

We found the best performance comes from layered decision-making:

- Motion gating (cheap filter): "Is there meaningful movement in the scene region?"

- Object detection (smarter filter): "Is the moving thing a person/vehicle/animal?"

- Verification stage (strict confirmation): "Does this look like a real human event over time?"

This pipeline matters because it prevents one noisy frame from triggering a full alert.
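A minimal sketch of that three-stage cascade, assuming each stage is a callable supplied by the deployment. The `has_motion`, `detect`, and `verify` arguments are hypothetical stand-ins for a motion model, an object detector, and a confirmation step; the point is the ordering, where cheap checks run first and each later stage only sees what survived the earlier one.

```python
from dataclasses import dataclass, field
from typing import Callable, FrozenSet, List


@dataclass
class CascadeResult:
    alert: bool
    stopped_at: str                       # last stage the frame reached
    labels: List[str] = field(default_factory=list)


def run_cascade(frame: object,
                has_motion: Callable[[object], bool],
                detect: Callable[[object], List[str]],
                verify: Callable[[List[str]], bool],
                alert_labels: FrozenSet[str] = frozenset({"person"})) -> CascadeResult:
    # Stage 1: cheap motion gate; in a quiet scene most frames stop here.
    if not has_motion(frame):
        return CascadeResult(False, "motion_gate")
    # Stage 2: run the (more expensive) detector only on frames that moved,
    # and keep only classes that are allowed to raise an alert.
    labels = [label for label in detect(frame) if label in alert_labels]
    if not labels:
        return CascadeResult(False, "detection")
    # Stage 3: strict confirmation (e.g. temporal or pose checks) before alerting.
    if not verify(labels):
        return CascadeResult(False, "verification", labels)
    return CascadeResult(True, "verification", labels)


# A dog passes the motion gate but is dropped at the detection stage.
result = run_cascade("frame-001", has_motion=lambda f: True,
                     detect=lambda f: ["dog"], verify=lambda labels: True)
print(result.alert, result.stopped_at)  # False detection
```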

Step 3: The "secret sauce": Pose estimation + time-based confirmation (not just bounding boxes)

A bounding box is useful, but it's also blunt.

In real sites, a lot of false alerts happen because something looks like a person for one frame:

- a swinging shadow forms a human-like silhouette

- rain streaks create random contours

- a dog at a distance becomes a "person-sized blob"

- steam momentarily forms a shape that triggers detection

If your system fires an alert the moment it sees a box, it will inevitably "see ghosts."

That's why we don't treat a single frame as truth.

Time + frame aggregation threshold (confirmation logic)

Instead of asking:

"Did the model detect something once?"

We ask a stronger engineering question:

"Did it stay real long enough to be real?"

So we apply time-based and frame-based aggregation thresholds, where an object must meet conditions like:

- be detected across N consecutive frames (or within a rolling time window)

- keep a consistent tracking ID over time (not flickering detections)

- show stable patterns of size and location change

- keep its confidence above a threshold across those frames

This eliminates a huge number of alerts caused by one-frame hallucinations, camera noise, and transient lighting effects.
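Those confirmation rules can be expressed as a small per-track state machine. This sketch assumes an upstream tracker that assigns a `track_id` to each object; an alert fires only after `min_frames` consecutive frames in which the same track is seen with confidence of at least `min_conf` and its box area does not jump by more than `max_area_change`. All names and default values are illustrative assumptions, not Mikshi AI's production parameters.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def box_area(box: Box) -> float:
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


@dataclass
class TrackState:
    streak: int = 0                 # consecutive qualifying frames
    last_box: Optional[Box] = None
    alerted: bool = False           # alert at most once per track


class TemporalConfirmer:
    """Confirm a track only after it qualifies for min_frames consecutive frames."""

    def __init__(self, min_frames: int = 5, min_conf: float = 0.6,
                 max_area_change: float = 0.5) -> None:
        self.min_frames = min_frames
        self.min_conf = min_conf
        self.max_area_change = max_area_change  # max relative box-area change per frame
        self.tracks: Dict[int, TrackState] = {}

    def update(self, detections: Dict[int, Tuple[Box, float]]) -> List[int]:
        """detections maps track_id -> (box, confidence) for the current frame.
        Returns the track_ids confirmed on this frame."""
        # A track that vanishes for a frame (flicker) starts counting from zero again.
        # A production tracker would also expire stale tracks; omitted here.
        for track_id, state in self.tracks.items():
            if track_id not in detections:
                state.streak, state.last_box = 0, None

        confirmed = []
        for track_id, (box, conf) in detections.items():
            state = self.tracks.setdefault(track_id, TrackState())
            stable = True
            if state.last_box is not None and box_area(state.last_box) > 0:
                prev = box_area(state.last_box)
                stable = abs(box_area(box) - prev) / prev <= self.max_area_change
            qualifies = conf >= self.min_conf and stable
            state.streak = state.streak + 1 if qualifies else 0
            state.last_box = box
            if state.streak >= self.min_frames and not state.alerted:
                state.alerted = True
                confirmed.append(track_id)
        return confirmed


confirmer = TemporalConfirmer(min_frames=3)
box = (10.0, 10.0, 30.0, 60.0)
print(confirmer.update({7: (box, 0.9)}))  # []  a one-frame ghost appears...
print(confirmer.update({}))               # []  ...and is gone, so its streak resets
for _ in range(3):
    fired = confirmer.update({1: (box, 0.8)})
print(fired)                              # [1] confirmed on the third consecutive frame
```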

Pose estimation > just bounding boxes

We also go beyond the "human-shaped box" logic using pose estimation.

Bounding boxes answer:

"Something human-like exists."

Pose answers:

"Does this actually have a human structure?"

Example:

A dog may trigger a "human" box at night because it occupies a similarly sized region…

…but it won't generate a reliable set of human skeleton keypoints (like shoulders–hips–knees alignment).

So we can stop that detection from escalating from "motion found" → "intrusion alert."
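A simplified version of that skeleton check might look like the following. It assumes a pose model that returns COCO-style named keypoints as `(x, y, confidence)`; the `min_kp_conf` and `min_hip_angle` values and the angle rule itself are illustrative assumptions, not Mikshi AI's actual verification logic. The working assumption is that a dog-shaped blob usually yields low-confidence or missing human keypoints, so it fails the completeness check. The hip-angle test is orientation-independent, so an upright walker and a crawling person both pass, though a deeply crouched person could fail and the threshold would need tuning per site.

```python
import math
from typing import Dict, Optional, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, confidence)

# COCO-style keypoint names (an assumption about the pose model's output format).
JOINT_PAIRS = {
    "shoulder": ("left_shoulder", "right_shoulder"),
    "hip": ("left_hip", "right_hip"),
    "knee": ("left_knee", "right_knee"),
}


def joint_center(kps: Dict[str, Keypoint], names: Tuple[str, str],
                 min_kp_conf: float) -> Optional[Tuple[float, float]]:
    """Mean position of the confidently detected keypoints in a left/right pair."""
    pts = [kps[n] for n in names if n in kps and kps[n][2] >= min_kp_conf]
    if not pts:
        return None
    return (sum(p[0] for p in pts) / len(pts), sum(p[1] for p in pts) / len(pts))


def hip_angle_deg(shoulder: Tuple[float, float], hip: Tuple[float, float],
                  knee: Tuple[float, float]) -> float:
    """Angle at the hip between the hip->shoulder and hip->knee segments."""
    v1 = (shoulder[0] - hip[0], shoulder[1] - hip[1])
    v2 = (knee[0] - hip[0], knee[1] - hip[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        return 0.0
    cos_a = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))


def has_human_skeleton(kps: Dict[str, Keypoint], min_kp_conf: float = 0.5,
                       min_hip_angle: float = 90.0) -> bool:
    """Require confident shoulders, hips and knees forming a plausible torso-thigh chain."""
    shoulder = joint_center(kps, JOINT_PAIRS["shoulder"], min_kp_conf)
    hip = joint_center(kps, JOINT_PAIRS["hip"], min_kp_conf)
    knee = joint_center(kps, JOINT_PAIRS["knee"], min_kp_conf)
    if shoulder is None or hip is None or knee is None:
        return False  # incomplete skeleton: do not escalate on the box alone
    return hip_angle_deg(shoulder, hip, knee) >= min_hip_angle
```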

Client-specific negatives (self-learning the site)

Even the best general model won't know the unique weirdness of every location.

That's why we also support client-specific negative training, where certain site-specific objects or recurring patterns can be added to the "do-not-alert" learning bucket.

For example:

- a rotating fan shadow on a wall

- a steam vent near a gate

- a machine arm that moves periodically

- a specific animal frequently passing through

- reflective surfaces that create repeatable glare patterns

Once these are added as negative samples, the model gets better at that specific site through this self-learning loop, producing fewer false alerts without weakening true intrusion detection.
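The retraining step itself depends on the model and training stack, so it is not shown here. As a simpler, swapped-in stand-in, the sketch below keeps a per-camera list of operator-confirmed false alerts and suppresses new detections that overlap one of them (by intersection-over-union) with the same label. The `SiteNegatives` class and its `min_iou` default are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class SiteNegatives:
    """Operator-confirmed false alerts for one camera (hypothetical structure)."""
    min_iou: float = 0.5
    samples: List[Tuple[str, Box]] = field(default_factory=list)

    def add(self, label: str, box: Box) -> None:
        # In a retraining workflow these samples would also be exported as negatives.
        self.samples.append((label, box))

    def suppress(self, label: str, box: Box) -> bool:
        """True if this detection overlaps a known nuisance pattern with the same label."""
        return any(neg_label == label and iou(neg_box, box) >= self.min_iou
                   for neg_label, neg_box in self.samples)


# A steam vent near the gate keeps being detected as a "person" in the same spot.
negatives = SiteNegatives()
negatives.add("person", (100, 50, 140, 150))
print(negatives.suppress("person", (102, 52, 141, 149)))  # True
print(negatives.suppress("person", (300, 50, 340, 150)))  # False
```

A region-based shortcut is blunter than retraining: a real person standing exactly where the steam vent usually fires would also be suppressed, which is why learning the negatives into the model is the more robust route.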

Mini "Before vs After" Story — Mikshi AI in Action

Before Mikshi AI: Alerts everywhere, trust nowhere

One of our early deployments was a plant perimeter camera facing a side gate. On paper, it looked like a perfect setup: clear view, fixed lighting, and a defined restricted zone.

But in real life, the security team started receiving 40–60 intrusion alerts every night, especially between 2–4 AM.

Most of those alerts weren't intrusions at all. They were triggered by:

- stray dogs walking past the gate

- tree branches and leaves moving under floodlights

- shadows stretching and shifting across the ground

- insects near the lens during humid weather

- occasional rain streaks causing sudden motion spikes

Within a couple of weeks, something predictable happened:

the team began ignoring notifications because the system was crying wolf too often.

After Mikshi AI: Fewer alerts, higher trust

Once Mikshi AI was deployed on the same camera stream, the goal wasn't to "detect more"; it was to detect smarter.

We applied our full filtering pipeline:

- motion gating to avoid reacting to meaningless pixel changes

- object detection to classify what was moving

- pose-based verification so animals and shadows don't escalate into "human intrusion"

- time + frame aggregation thresholds so a single-frame ghost detection doesn't trigger an alert

- tracking consistency checks so an object must remain stable across time, not flicker in and out

The outcome was immediate and operationally meaningful:

Alerts dropped from 40–60 per night to just 2–5 per night.

Almost every alert now had a clear reason to exist.

Just as important, the alerts that remained were the ones that mattered:

- a person walking near the gate outside shift hours

- unauthorized entry into a restricted zone

- loitering behavior close to the boundary

Same camera. Same site. Same environment.

But after Mikshi AI, the system behaved less like a noisy sensor—and more like a reliable security teammate.

Section 3: Real-World Stress Test

Let's talk about the kind of conditions that break "demo-ready AI."

Scenario 1: Low light + rain + moving headlights

This is a perfect storm:

- Low light increases sensor noise and blur

- Rain creates streaks and specular highlights

- Headlights create rapid brightness shifts across the frame

A simple detector will "see" motion everywhere.

Even some object detectors will throw random boxes for a few frames.

What helped us stabilize results:

- Preprocessing: mild denoising, contrast balancing (without over-smoothing)

- Temporal smoothing: avoid reacting to one bad frame

- Tracking consistency: alert only if an object stays consistent for N frames

- Zone awareness: ignore irrelevant areas (like roads outside the perimeter)
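Temporal smoothing can be as simple as an exponential moving average (EMA) over per-frame confidence, combined with hysteresis so the alert state does not flip on every noisy frame. This sketch assumes one confidence value per frame for a tracked object; `alpha`, `on_level`, and `off_level` are illustrative values, not tuned settings.

```python
from typing import Iterable, List


def smoothed_alert_states(confidences: Iterable[float], alpha: float = 0.3,
                          on_level: float = 0.7, off_level: float = 0.4) -> List[bool]:
    """Return the alert state after each frame.

    The EMA means a single bright headlight or rain frame moves the average by
    only `alpha` of its jump. Hysteresis: switch on at or above on_level, and
    switch off only once the average drops below off_level.
    """
    ema = 0.0
    active = False
    states = []
    for conf in confidences:
        ema = alpha * conf + (1 - alpha) * ema
        if not active and ema >= on_level:
            active = True
        elif active and ema < off_level:
            active = False
        states.append(active)
    return states


print(smoothed_alert_states([0.0, 0.0, 0.95, 0.0, 0.0]))  # one bad frame: never alerts
print(smoothed_alert_states([0.9] * 6))                    # sustained: alerts from frame 5
```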

Optional upgrade in some deployments:

- Thermal integration to confirm human presence when RGB cameras struggle (thermal doesn't care about shadows or headlights the way visible light does)
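A minimal sketch of RGB plus thermal confirmation, assuming the thermal frame is radiometric (per-pixel temperatures in degrees C) and already registered to the RGB frame so the same box coordinates apply to both. The function name and the `min_delta_c` margin over the scene's median temperature are hypothetical; a real deployment would calibrate that margin per site and season.

```python
from statistics import median
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) pixel indices; x2 and y2 are exclusive


def thermal_confirms_person(thermal: List[List[float]], box: Box,
                            min_delta_c: float = 4.0) -> bool:
    """Confirm an RGB 'person' box only if that region is clearly warmer than the scene.

    thermal holds per-pixel temperatures in degrees C, registered to the RGB frame.
    """
    x1, y1, x2, y2 = box
    region = [t for row in thermal[y1:y2] for t in row[x1:x2]]
    if not region:
        return False
    scene = median([t for row in thermal for t in row])
    return median(region) - scene >= min_delta_c
```

A shadow or headlight reflection inside the box leaves its temperature near the scene median, so the RGB detection is not confirmed. Using the scene median as the reference keeps the check simple but assumes the person covers less than half of the frame.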

Scenario 2: Steam near a factory entry

Steam is especially tricky because it has:

- motion

- volume

- shifting edges that can momentarily resemble human shapes

Instead of treating steam as an object, we treat it as a dynamic texture region:

- high motion but low structural consistency

- no stable keypoints

- no persistent track

So it gets filtered before it becomes an intrusion alarm.
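Those three properties can be combined into a simple per-region rule. The sketch assumes that, for each candidate region, upstream stages already measure a motion score, the IoU of the region's box between consecutive frames, the fraction of frames with a confident skeleton, and the track length. The `RegionStats` fields and all cut-off values are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class RegionStats:
    motion_score: float          # e.g. fraction of pixels changing inside the region (0-1)
    frame_ious: List[float]      # IoU of the region's box between consecutive frames
    pose_hit_rate: float         # fraction of frames with a complete, confident skeleton
    track_length: int            # frames the tracker kept the same ID


def is_dynamic_texture(s: RegionStats, min_motion: float = 0.3, max_shape_iou: float = 0.5,
                       max_pose_rate: float = 0.2, max_track_len: int = 10) -> bool:
    """Steam-like: lots of motion, but unstable shape, no skeleton, and no persistent track."""
    high_motion = s.motion_score >= min_motion
    unstable_shape = (mean(s.frame_ious) if s.frame_ious else 0.0) <= max_shape_iou
    no_keypoints = s.pose_hit_rate <= max_pose_rate
    no_track = s.track_length <= max_track_len
    return high_motion and unstable_shape and no_keypoints and no_track


steam = RegionStats(motion_score=0.6, frame_ious=[0.2, 0.3, 0.25], pose_hit_rate=0.0, track_length=4)
person = RegionStats(motion_score=0.4, frame_ious=[0.8, 0.85, 0.9], pose_hit_rate=0.9, track_length=40)
print(is_dynamic_texture(steam), is_dynamic_texture(person))  # True False
```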

What We Improved (Precision/Recall Trade-off)

Security AI always balances two forces:

- Recall: catching true threats

- Precision: avoiding nuisance alerts

The goal isn't "perfect recall at any cost."

The goal is reliable security operations.
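For reference, both quantities come from counts of true positives (TP), false positives (FP), and false negatives (FN) over an evaluation period. The counts below are made up to illustrate the trade-off; they are not results from the deployment described above.

```python
from typing import Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def rounded(pair: Tuple[float, float]) -> Tuple[float, float]:
    return round(pair[0], 3), round(pair[1], 3)


# Hypothetical month with 10 real intrusions (illustrative numbers only).
# Maximum sensitivity: all 10 caught, plus 1,490 nuisance alerts.
print(rounded(precision_recall(tp=10, fp=1490, fn=0)))  # (0.007, 1.0)
# Tuned thresholds: 9 of 10 caught, plus 21 nuisance alerts.
print(rounded(precision_recall(tp=9, fp=21, fn=1)))     # (0.3, 0.9)
```

The first setting never misses, but only about one alert in 150 is real, which is exactly the regime in which teams start muting notifications. The second gives up one catch in exchange for alerts that are right 30% of the time.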

Here's what we improved through engineering + tuning:

- Fewer single-frame alerts (more stability)

- Higher-confidence human verification

- Better behavior in bad weather and low light

- Reduced false positives from animals and shadows

- More consistent alert quality for security teams

Conclusion: Accuracy Isn't Just Catching Threats—It's Preventing Alert Fatigue

A camera that detects every leaf shadow is not "highly secure."

It's simply noisy.

True accuracy means:

- catching real intrusions when they happen

- minimizing false alarms so humans stay responsive

The practical takeaway is simple:

Don't judge AI surveillance by how often it triggers.

Judge it by how often it triggers for the right reasons.

Because in real facilities, the best system isn't the one that sees the most motion.

It's the one that knows what motion matters.
